Securing your HomeAssistant setup should be a priority, especially if you plan on accessing your system remotely. One of the best ways to do this is by setting up an SSL certificate. This article guides you through five easy steps to set up SSL on HomeAssistant using Let’s Encrypt Certbot.

Understanding the Importance of SSL for HomeAssistant

Secure Sockets Layer, popularly known as SSL, is a security protocol that encrypts the connection between a web server and a client. Modern servers actually use its successor, Transport Layer Security (TLS), but the older name has stuck. When enabled on your HomeAssistant, it prevents eavesdropping and tampering by encrypting all traffic between HomeAssistant and the browsers or mobile apps that connect to it. This is crucial when accessing your HomeAssistant remotely over the internet, where your data could be intercepted.

Moreover, SSL also provides authentication, ensuring that you’re communicating with the right server and not a malicious one. This is achieved through the use of SSL certificates issued by trusted Certificate Authorities (CAs). These certificates also provide visual cues, such as a padlock symbol, giving end-users confidence that their connection is secure.

An Overview of Let’s Encrypt Certbot

Let’s Encrypt is a free, automated, and open Certificate Authority. It provides the digital certificates needed to enable HTTPS (SSL/TLS) for websites. Certbot is an easy-to-use client that fetches certificates from Let’s Encrypt and can configure your web server to use them.

By using Let’s Encrypt Certbot, you can easily acquire and renew SSL certificates for your HomeAssistant. It automates obtaining, installing, and renewing certificates, which saves time, reduces the risk of manual errors, and keeps your connection secure without regular intervention.

Unlike many, if not most, HomeAssistant users, I find third-party VPN solutions an illogical way to access an otherwise cloud-free HomeAssistant setup. The HomeAssistant Cloud service from Nabu Casa doesn’t appeal to me either. The core of my philosophy is to maintain a smart home solution that is independent of both third-party and cloud services.

Step 1: Installing Let’s Encrypt Certbot

The initial step to enable SSL for your HomeAssistant involves installing Let’s Encrypt’s Certbot. The installation method differs across operating systems. On Linux systems, it’s straightforward to install Certbot using the package manager. For example, Ubuntu users can execute the command sudo apt-get install certbot.

My setup took a slightly different route. As previously mentioned, my HomeAssistant operates within a Docker container, and I also host several websites, including the one hosting this blog post, on a virtual machine. This VM shares the same server as the HomeAssistant Docker container. Installing Certbot on CentOS Stream, the operating system of my VM where SSL is primarily needed, was a breeze by simply following the guided instructions available on the Certbot website.

You can confirm the successful installation of Certbot by executing certbot --version in your terminal. This command should return the version number of Certbot installed on your machine. If the command is not found or returns an error, Certbot was not installed correctly; review the installation steps or reinstall it.
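As a small sketch of that check, the snippet below tests whether certbot is on the PATH before asking for its version. The helper name check_installed is made up for this example; the only Certbot-specific part is the certbot --version call.

```shell
#!/bin/sh
# check_installed is a hypothetical helper, not part of Certbot.
# It prints a one-line status and returns 0 if the command exists, 1 otherwise.
check_installed() {
    if command -v "$1" >/dev/null 2>&1; then
        printf '%s: found at %s\n' "$1" "$(command -v "$1")"
    else
        printf '%s: not found on PATH\n' "$1"
        return 1
    fi
}

# Only ask for the version when the binary is actually there.
if check_installed certbot; then
    certbot --version
fi
```

The same helper can be pointed at other tools used later in this setup, such as rsync or ssh.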

Step 2: Generating an SSL Certificate

With Certbot installed, the next step is generating an SSL certificate for your domains. In my experience, executing the command certbot --apache was a straightforward process. Certbot scanned all my Apache virtual hosts and generated certificates for them. Interestingly, it used the first domain in the list as the certificate name, with the other domains attached to that same certificate as additional names (this is a naming choice, not a root certificate in the CA sense). It is a decision I wouldn’t have made intentionally, but one I’m content with nonetheless.

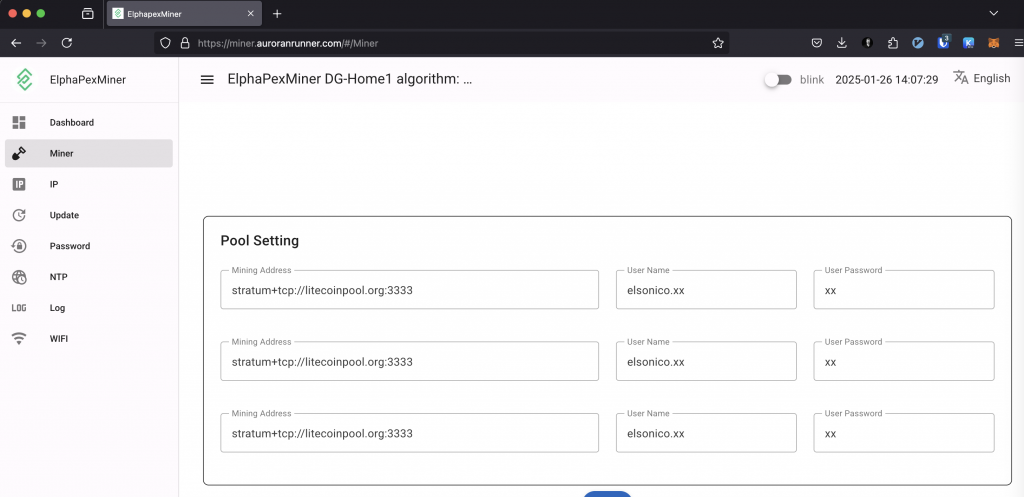

To secure a certificate for HomeAssistant as well, I added a placeholder virtual host to Apache and ran certbot --apache once more, this time adding the domain reserved for HomeAssistant, which for me is ha.auroranrunner.com.
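A rough sketch of how such a command line can be assembled is shown below. It only prints the command rather than running it. The helper domain_args and the domain www.example.com are invented for illustration, and treating the first domain as the certificate name is an assumption that mirrors the behavior described above.

```shell
#!/bin/sh
# domain_args is a hypothetical helper: it turns a list of domains into
# the repeated "-d name" arguments that certbot expects.
domain_args() {
    out=""
    for d in "$@"; do
        out="$out -d $d"
    done
    printf '%s\n' "${out# }"
}

# www.example.com stands in for an existing Apache site; the first domain
# doubles as the certificate name in this sketch.
printf 'certbot --apache --cert-name %s --expand %s\n' \
    "www.example.com" \
    "$(domain_args www.example.com ha.auroranrunner.com)"
```

After a real run, certbot certificates lists each certificate name together with the domains it covers and its expiry date, which is a quick way to confirm the HomeAssistant domain was added.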

An alternative method involves the command certbot certonly --standalone. This tells Certbot to obtain a certificate by running its own temporary web server (standalone mode) to prove domain ownership, which is useful when no web server is running on the machine. Port 80 must be free and reachable from the internet while it runs.

However, my objective was for Certbot to manage the certification updates for all domains collectively, thus I adopted a slightly different strategy.

If you only need a certificate for HomeAssistant and have no Apache configuration to consider, the process is simple. Certbot asks for your domain name and a contact email address, then contacts the Let’s Encrypt Certificate Authority (CA), which issues an SSL certificate for your domain. The new certificate and its private key are stored in the directory /etc/letsencrypt/live/your_domain_name/.
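For reference, a non-interactive version of the standalone request might look like the sketch below. The domain and email address are placeholders, and the run wrapper is a hypothetical convenience that only prints commands unless DRY_RUN=0 is set, so the snippet is safe to try as written.

```shell
#!/bin/sh
# Assumed placeholders: replace with your own domain and contact address.
DOMAIN="ha.example.com"
EMAIL="admin@example.com"

# With DRY_RUN=1 (the default here) commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Standalone mode starts a temporary web server, so port 80 must be free
# and reachable from the internet while this runs.
run certbot certonly --standalone \
    --non-interactive --agree-tos \
    -m "$EMAIL" -d "$DOMAIN"

# On success the files are expected here (live/ holds symlinks into archive/).
printf 'certificate: /etc/letsencrypt/live/%s/fullchain.pem\n' "$DOMAIN"
printf 'private key: /etc/letsencrypt/live/%s/privkey.pem\n' "$DOMAIN"
```

Adding --dry-run to the certbot command rehearses the request against the Let’s Encrypt staging environment without issuing a real certificate, which helps avoid rate limits while testing.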

Step 3: Syncing SSL Certificates Between the Primary and Secondary Hosts

In my situation, it was necessary to establish a method for synchronizing the SSL certificates between the virtual machine hosting the Apache web servers and the server operating the HomeAssistant Docker container. To accomplish this, I undertook the following steps:

- Established passwordless SSH authentication between my Apache hosts and the server hosting HomeAssistant to ensure a seamless connection.

- Created a script located at /usr/local/bin/sync_lets_cert designed to facilitate the synchronization of Let’s Encrypt certificates.

- Developed a systemd service aimed at automating the daily synchronization of Let’s Encrypt certificates between the two hosts, ensuring that both systems always use the latest SSL certificates.

- Configured a dedicated volume for the HomeAssistant Docker container mapped to /etc/letsencrypt:/etc/letsencrypt. This setup allows the HomeAssistant container direct access to the synchronized SSL certificates, simplifying the process of securing communications.
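The first and last items in that list can be sketched roughly as follows. The remote account, the key path, the container name, the config path, and the read-only mount flag are all assumptions of this example rather than details of my setup, and the run wrapper only prints commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Assumed placeholders: the remote account and key path are illustrative.
REMOTE="root@my_vm_host_server"
KEY_FILE="${HOME:-/root}/.ssh/id_ed25519"

DRY_RUN="${DRY_RUN:-1}"   # 1 = print commands only, 0 = execute them
run() {
    if [ "$DRY_RUN" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# On the Apache VM: create a key without a passphrase and install it remotely.
run ssh-keygen -t ed25519 -N "" -f "$KEY_FILE"
run ssh-copy-id -i "$KEY_FILE.pub" "$REMOTE"

# BatchMode=yes makes ssh fail instead of prompting for a password, which
# shows whether key-based login works unattended.
run ssh -i "$KEY_FILE" -o BatchMode=yes "$REMOTE" true

# On the Docker host: mount the synced directory into the container.
# Mounting all of /etc/letsencrypt keeps the live/ symlinks valid, since
# they point into archive/. The :ro flag and /path/to/config are additions
# of this sketch.
run docker run -d --name homeassistant \
    -v /path/to/config:/config \
    -v /etc/letsencrypt:/etc/letsencrypt:ro \
    ghcr.io/home-assistant/home-assistant:stable
```

Mounting only the live directory would leave its symlinks dangling inside the container, which is one reason to map the whole /etc/letsencrypt tree.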

The script located at /usr/local/bin/sync_lets_cert is responsible for synchronizing the SSL certificates between servers. Its contents are as follows:

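    #!/bin/bash
    # Push the Let's Encrypt certificate files for one domain to a second host.
    # Stop on the first error or on any unset variable.
    set -euo pipefail

    # Variables
    SECONDARY_SERVER="my_vm_host_server"
    DOMAIN="ha.auroranrunner.com"
    LIVE_PATH="/etc/letsencrypt/live/$DOMAIN"
    ARCHIVE_PATH="/etc/letsencrypt/archive/$DOMAIN"
    DEST_LIVE_PATH="/etc/letsencrypt/live/$DOMAIN"
    DEST_ARCHIVE_PATH="/etc/letsencrypt/archive/$DOMAIN"

    # Sync the archive directory first: it holds the actual certificate files
    rsync -avz -e ssh "$ARCHIVE_PATH/" "$SECONDARY_SERVER:$DEST_ARCHIVE_PATH/"

    # Sync the live directory, whose symlinks point into ../../archive
    rsync -avz -e ssh "$LIVE_PATH/" "$SECONDARY_SERVER:$DEST_LIVE_PATH/"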

This script keeps the certificate files in sync between the hosts. The next step is to set up a systemd timer and service to run the script on a schedule, which proved slightly more complex but worked as follows:

- Create a timer file at /etc/systemd/system/sync_lets_cert.timer with the following content to establish a daily execution schedule:

      [Unit]
      Description=Daily timer for Let's Encrypt certificate sync

      [Timer]
      OnCalendar=daily
      Persistent=true

      [Install]
      WantedBy=timers.target

- Then, create the service file /etc/systemd/system/sync_lets_cert.service to define the synchronization task:

      [Unit]
      Description=Sync Let's Encrypt Certificates

      [Service]
      Type=oneshot
      ExecStart=/usr/local/bin/sync_lets_cert

- Finally, reload systemd, run the service once to test it, and enable and start the timer with the following commands:

      systemctl daemon-reload
      systemctl start sync_lets_cert.service
      systemctl enable --now sync_lets_cert.timer

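With these steps completed, the SSL certificates are renewed automatically by Certbot and synchronized between the servers daily. Let’s Encrypt certificates are valid for 90 days, and Certbot typically renews them about 30 days before they expire, so the daily sync picks up each new certificate well before the old one lapses.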
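To confirm that the two hosts really hold the same certificate, one option is to compare checksums. The sketch below is only an illustration: same_checksum and report_sync are hypothetical helpers, and the /tmp path in the usage comment is an assumption.

```shell
#!/bin/sh
# same_checksum and report_sync are hypothetical helpers for this sketch.

# Return 0 when both files have the same CRC and size (POSIX cksum).
same_checksum() {
    [ "$(cksum < "$1")" = "$(cksum < "$2")" ]
}

# Print a short verdict for two copies of the same certificate file.
report_sync() {
    if same_checksum "$1" "$2"; then
        echo "in sync"
    else
        echo "out of sync"
    fi
}

# Example, run on the Apache VM as a user that can read the certificate:
#   CERT=/etc/letsencrypt/live/ha.auroranrunner.com/fullchain.pem
#   ssh my_vm_host_server cat "$CERT" > /tmp/remote_fullchain.pem
#   report_sync "$CERT" /tmp/remote_fullchain.pem
#
# The timer itself can be inspected with standard systemd tools:
#   systemctl list-timers sync_lets_cert.timer
#   journalctl -u sync_lets_cert.service -n 20 --no-pager
```

An "out of sync" result right after a renewal is expected until the next timer run; running systemctl start sync_lets_cert.service forces an immediate sync.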

Step 4: Setting up SSL on HomeAssistant

With the SSL certificate secured, the following step is to integrate SSL into your HomeAssistant setup. This process entails adjusting your HomeAssistant’s configuration to recognize and utilize the SSL certificate. Achieve this by appending the below entries into your HomeAssistant’s configuration.yaml file:

    http:
      ssl_certificate: /etc/letsencrypt/live/ha.auroranrunner.com/fullchain.pem
      ssl_key: /etc/letsencrypt/live/ha.auroranrunner.com/privkey.pem
      base_url: https://ha.auroranrunner.com:8123

These lines tell HomeAssistant where to find the SSL certificate (fullchain.pem) and its corresponding private key (privkey.pem). After adding them, restart HomeAssistant for the changes to take effect.

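Initially, setting up SSL without specifying base_url sufficed for web browser access. For the mobile application to work correctly, however, I had to add base_url. Note that newer HomeAssistant releases have deprecated base_url in favor of the external_url and internal_url settings under the homeassistant: section, so check the documentation for your version.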

Regarding domain registration, I own auroranrunner.com and manage its DNS settings via the AWS console. Given the dynamic nature of my IP address, I employ the dy.fi service to update the DNS record for my dy.fi domain automatically. On AWS Route 53, ha.auroranrunner.com is configured with a CNAME record pointing to sirius.dy.fi, a nifty setup. Thanks to my router’s dy.fi support, any alterations to my external IP are automatically synchronized.

Step 5: Troubleshooting Common SSL Setup Issues

While setting up SSL on HomeAssistant using Let’s Encrypt Certbot is straightforward, you might encounter some issues along the way. One common issue is the “Failed authorization procedure” error, which usually means Certbot could not verify domain ownership. To resolve it, make sure your domain name resolves to your current public IP address and that the port Certbot uses for validation (port 80 for the default HTTP challenge) is reachable from the internet and forwarded to the machine running Certbot.
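A quick way to check the DNS side is to compare what the resolver returns with the IP address you expect. The helper below is a hypothetical sketch: resolves_to and the MY_PUBLIC_IP variable in the comment are invented names, and 203.0.113.10 is a documentation address standing in for a real public IP.

```shell
#!/bin/sh
# resolves_to is a hypothetical helper. It reads resolver output on stdin
# (one record per line, as printed by `dig +short`) and returns 0 if one
# line is exactly the expected IP address.
resolves_to() {
    grep -Fqx -- "$1"
}

# Offline demonstration with sample resolver output: a CNAME target
# followed by the A record it leads to.
if printf 'sirius.dy.fi.\n203.0.113.10\n' | resolves_to 203.0.113.10; then
    echo "DNS OK"
else
    echo "DNS does not point at the expected IP"
fi

# Against live DNS the same check would read:
#   dig +short ha.auroranrunner.com | resolves_to "$MY_PUBLIC_IP"
```

Matching whole lines avoids a false positive when the expected address is only a prefix of the returned one. Once DNS looks right, certbot renew --dry-run rehearses the validation without issuing a certificate.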

Another common issue is the “SSL connection error”, which usually means HomeAssistant is not correctly configured to use the SSL certificate. To resolve it, check that the ssl_certificate and ssl_key paths in your HomeAssistant configuration file are correct and that the files are readable by HomeAssistant. In a Docker setup, the paths must be valid inside the container, not just on the host.
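The path check can be scripted. The following is a minimal sketch built around a hypothetical helper, check_pem_pair; the container name in the comment is also an assumption.

```shell
#!/bin/sh
# check_pem_pair is a hypothetical helper. Given a directory, it verifies
# that fullchain.pem and privkey.pem exist, are readable, and are not empty.
# It prints one line per problem and returns the number of problems found.
check_pem_pair() {
    problems=0
    for name in fullchain.pem privkey.pem; do
        pem_file="$1/$name"
        if [ ! -r "$pem_file" ]; then
            printf 'missing or unreadable: %s\n' "$pem_file"
            problems=$((problems + 1))
        elif [ ! -s "$pem_file" ]; then
            printf 'empty file: %s\n' "$pem_file"
            problems=$((problems + 1))
        fi
    done
    return "$problems"
}

# Run it where HomeAssistant actually reads the files. For a Docker setup
# that means inside the container, for example:
#   docker exec homeassistant ls -lL /etc/letsencrypt/live/ha.auroranrunner.com/
check_pem_pair "/etc/letsencrypt/live/ha.auroranrunner.com" || true
```

Because the readability test follows symlinks, a live/ entry whose target in archive/ was never synced is reported as missing, which is a common cause of this error on a secondary host.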

Setting up SSL on HomeAssistant using Let’s Encrypt Certbot is a good way to secure your system. While the process might seem complex, it can be broken down into five steps: installing Certbot, generating an SSL certificate, syncing the certificate between hosts (if Certbot and HomeAssistant run on different machines), configuring HomeAssistant to use the certificate, and troubleshooting common SSL setup issues. By following these steps, you can secure your HomeAssistant and keep your data safe and private.

Conclusion

Implementing SSL with Certbot is relatively straightforward for those who are well-acquainted with their network setup. This approach offers a security advantage over depending on third-party VPN solutions, which merely introduce an additional layer to your existing infrastructure. Leveraging third-party services to manage your smart home system does not enhance security; rather, it compromises it. While VPNs can serve as a viable security measure for those lacking the expertise to properly configure their home networks, the assertion that third-party VPNs inherently bolster security is misleading.

For those considering a VPN, I advocate hosting your own. In my experience, OpenVPN has been fully compatible with HomeAssistant and costs nothing extra. Like the SSL setup, a self-hosted OpenVPN server requires dynamic DNS unless you have the luxury of a static IP address.

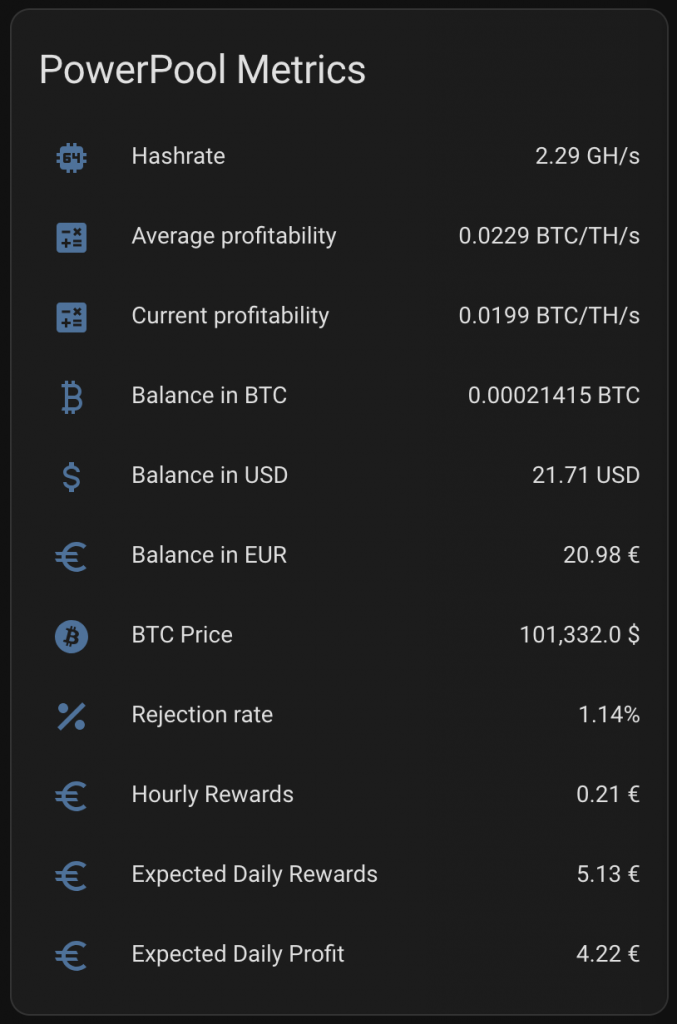

